Guide to Verification and Validation

“If you don’t have time to do it right, when will you have the time to do it over?” John Wooden, legendary UCLA basketball coach

At PassiveLogic, a key component of our product evaluation process is asking ourselves, “Have we met the objectives of the original design?” If we develop tools that enable people to solve problems in an elegant and straightforward way, then we’ve done our job. If not, we go back to the drawing board.

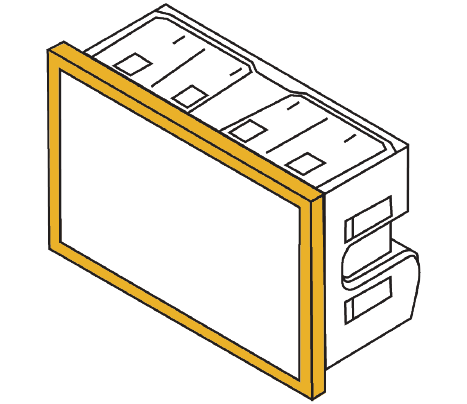

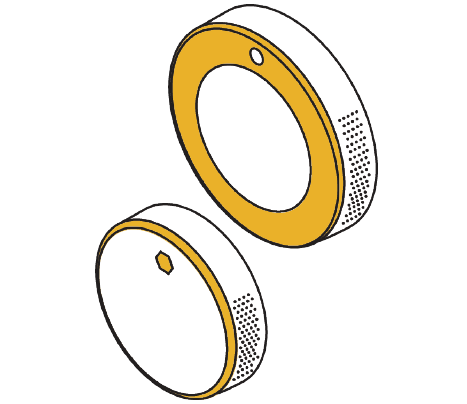

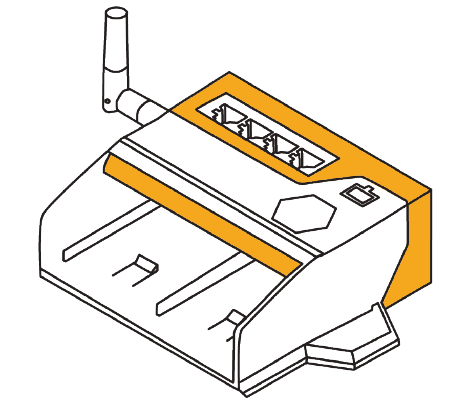

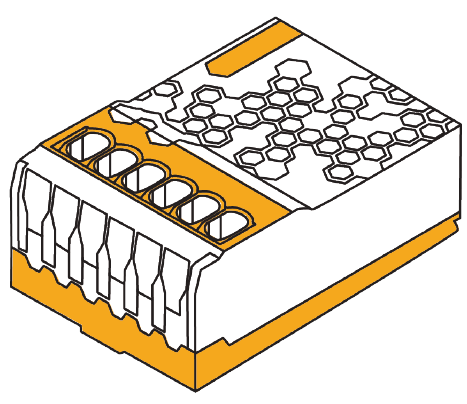

The tool we’re building is a fully autonomous platform of hardware and software products that solve the all-too-common pain points that the building controls industry faces today. The platform aims to eliminate the negative aspects of siloed proprietary systems, outdated technology, and fixed programming, all the while optimizing for energy efficiency and occupant comfort through generative autonomy.

Generative autonomy enables edge-based, real-time decision making via AI-powered controls generated using physics-based digital twins. This is game-changing for the market: we’re upping the ante by developing an entire end-to-end ecosystem that combines the best of BIM, generative design, and differentiable computing.

Building a platform that can deliver on its promise and be adopted by a typically risk-averse industry is tough. But as John Wooden famously said, “If you don’t have time to do it right, when will you have the time to do it over?” Doing it right requires a lot of planning, developing, and testing, and then going back to square one if we don’t have things exactly right. A fundamental part of making this possible is our Verification and Validation team, which acts as an evaluator for the rest of our engineering teams.

A fully-fledged testing operation

Our team members in India: Islam Ahmad, Paritosh Kumar, Raj Kumar Singh, Sulekha Malik (left to right)

We call our team the Verification and Validation team because “testing” on its own doesn’t quite cover the complexity of this process. Verification answers the question “Are we building the product correctly?” Validation, on the other hand, answers “Are we building the right product?” Our goal is not only to meet customer expectations but also to reset them. Our stakeholders aren’t familiar with autonomous control, so we must prove to the market that our AI is safe, robust, and easy to use before people will make the leap to adopt it.

To take full advantage of a complete testing process, we’ve invested in a dedicated Verification and Validation team that brings specialized expertise straight into our offices. Because its members are not directly involved in development, they can approach products objectively, which helps them identify issues that those familiar with the implementation may overlook.

To cover the entire testing process, we’ve divided the V&V team into several test groups:

Software Testing and Q/A: This group tests all embedded systems through unit testing, integration testing, and system validation testing.

Hardware Test: This group tests the physical and mechanical capabilities of our products, as well as the accuracy and precision of all sensors.

User Software Test: This group designs test cases according to the specific requirements of our user software applications, and then validates those applications through all phases of testing. They perform daily sanity testing on every nightly build and report the results to stakeholders. They also act as gatekeepers, providing the final testing sign-off before every release.

Applied Systems: This group designs field tests to see how our products fare in the scenarios they’re designed for. They also ensure, through integration testing, that all the pieces of the puzzle come together: one product might work well by itself, but it is vital that the entire platform works well.

As a whole, the Verification and Validation team possesses expertise across testing methodologies, techniques, and tools. Our team members are skilled in designing comprehensive test plans, creating test cases, and executing various testing activities. Their collective experience across software and hardware enables them to uncover potential issues and ensure the products meet the required quality standards. “We’ve been lucky to have hired a whole lineup of incredible test engineers, each of whom brings their own set of highly sought-after skills,” John Easterling, Director of Verification and Validation, remarked. “Having this dream team means that we have people who allocate their time and resources to thoroughly testing the software, and thus provide the utmost safety and security of data for all of our users. This leads to higher customer satisfaction and a seamless user experience.”

Designing the ultimate testing process

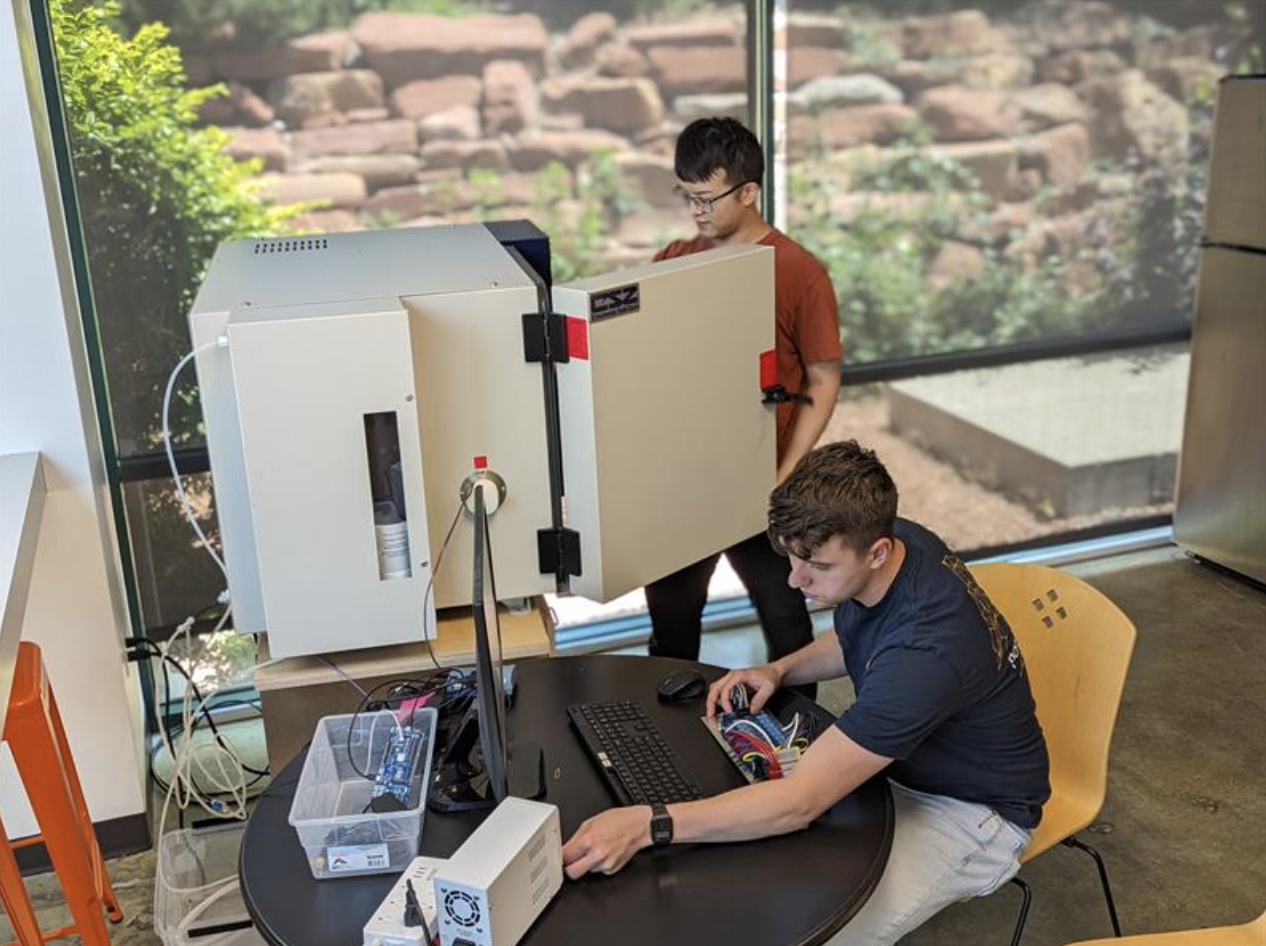

Xingsheng Wei and Christian Graff (left to right)

We follow a Design-Build-Execute strategy when we carry out our testing. The first step is communicating with the design and product management teams, who are primarily responsible for creating the detailed technical specifications for each product release and aligning them with market needs. After we’ve met and discussed the product requirements, we design a test plan that consists of individual test and test fixture definitions. We then build the rigorous tests and the “fixtures” or “jigs” needed to carry them out. Then we execute. These test suites verify product architecture, implementation, and functionality against those initial requirements.
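To make the Design-Build-Execute flow more concrete, here is a minimal sketch of a test plan made of test and fixture definitions, with each test traced back to the requirement it verifies. All names here (`Fixture`, `TestCase`, `TestPlan`, the requirement ID `REQ-TEMP-01`, and the 0.5 °C tolerance) are hypothetical illustrations, not PassiveLogic’s actual tooling or specifications.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical model of a test plan; every name is illustrative only.

@dataclass
class Fixture:
    """A test fixture (or "jig"): prepares the environment a test needs."""
    name: str
    setup: Callable[[], dict]

@dataclass
class TestCase:
    """One test, traced back to the requirement it verifies."""
    requirement_id: str
    fixture: Fixture
    check: Callable[[dict], bool]

@dataclass
class TestPlan:
    tests: List[TestCase] = field(default_factory=list)

    def execute(self) -> Dict[str, bool]:
        """Run every test; a requirement passes only if all of its tests pass."""
        results: Dict[str, bool] = {}
        for test in self.tests:
            env = test.fixture.setup()   # Build: prepare the fixture
            passed = test.check(env)     # Execute: run the check
            results[test.requirement_id] = (
                results.get(test.requirement_id, True) and passed
            )
        return results

# Design: a hypothetical requirement that a sensor reads within 0.5 °C of a setpoint.
chamber = Fixture("temp_chamber", lambda: {"setpoint_c": 21.0, "reading_c": 21.3})
plan = TestPlan([
    TestCase("REQ-TEMP-01", chamber,
             lambda env: abs(env["reading_c"] - env["setpoint_c"]) <= 0.5),
])
print(plan.execute())  # {'REQ-TEMP-01': True}
```

Keying results by requirement ID keeps the report aligned with the original specifications, which is what the verification step ultimately checks against.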

The name of the game is to break the product every way we can, so that users never have to experience those breakages themselves. As Cory Mosiman, Applied Systems Validation and Software Engineer, often says, “They make it, we break it.” While each specialty test group customizes its own tests, we always ask ourselves a long list of key questions throughout the process, which might include:

Does the product perform in any unexpected ways?

Does the product achieve our objectives?

How does the product perform in a virtual environment, and does that track to how it behaves in the real world?

If it does achieve the objectives, to what degree, and in what ways does it succeed?

If the product fails the test, to what degree, and why?

Why do we perform validation and verification tests?

Disrupting an industry requires a truly effective product, and that means carefully designing and carrying out a battery of tests. Our User Software Test Lead, Paritosh Kumar, who previously led testing efforts at Johnson Controls, Aptara, and IBM, is a huge proponent of pushing the product to be as effective as possible. “All of these processes, though lengthy, contribute to the development of robust, high-quality systems, products, and models,” he explains. He expands on the main benefits of a fully fledged testing process below:

Error Detection: By subjecting the system to different scenarios and inputs, we’ll uncover potential issues, bugs, and flaws that may arise during real-world usage. This allows for debugging and refining the system before it gets deployed.

Performance Evaluation: We can measure accuracy, precision, recall, speed, and efficiency, to determine how well the system or model performs under different conditions. This evaluation helps make informed decisions about its readiness for deployment or further improvements.

Risk Mitigation: Perhaps the most crucial part of the process is that by identifying and fixing issues, we reduce the chances of encountering problems in the field. This helps minimize potential financial losses, reputation damage, or safety hazards that may arise from using an untested system.

Iterative Improvement: By collecting feedback and insights from the testing phases, we can make necessary adjustments, optimize performance, and enhance the overall quality of the system or model.
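The Performance Evaluation item mentions accuracy, precision, and recall. As a minimal sketch, assuming a binary classifier (for example, a hypothetical fault detector) whose predictions are compared against labeled ground truth, those three metrics can be computed from the confusion-matrix counts. The function name and sample data are illustrative only.

```python
from typing import Dict, Sequence

def evaluate(predicted: Sequence[bool], actual: Sequence[bool]) -> Dict[str, float]:
    """Compute accuracy, precision, and recall for binary predictions."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # true positives
    fp = sum(1 for p, a in pairs if p and not a)      # false positives
    fn = sum(1 for p, a in pairs if not p and a)      # false negatives
    tn = sum(1 for p, a in pairs if not p and not a)  # true negatives
    total = tp + fp + fn + tn
    return {
        # Guard each ratio so empty inputs or no positives don't divide by zero.
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical results from five test scenarios.
predicted = [True, True, False, False, True]
actual = [True, False, False, True, True]
print(evaluate(predicted, actual))  # accuracy 0.6; precision and recall 2/3
```

Precision asks how many flagged cases were real; recall asks how many real cases were flagged. Tracking both matters because a system can trivially maximize one at the expense of the other.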

Failure is not an option

In the end, our goal is to make sure that our product works for each individual user and that they have an extraordinary experience in every interaction with our products. Cory Mosiman, who designs and builds field tests for our products, previously worked at the National Renewable Energy Laboratory, where he was a key contributor to the Alfalfa project. His experience in applied research has given him a clear understanding that bringing AI to the industrial world involves significant risks, costs, and operational maintenance. He likes to think of NASA Flight Director Gene Kranz and the phrase “failure is not an option,” famously associated with Kranz’s role in the Apollo 13 mission. “During the space race, getting into space was a new concept where they needed to define testing procedures to ensure that the launch would be successful,” Cory explained. “We might not be going into space, but we’re applying that same methodology to making sure that our product is completely safe, completely vetted.”

Tests are designed with the appropriate apparatus to simulate real-world use cases as closely as possible. For example, to validate a device like PassiveLogic’s Sense Nano, we vet the hardware through environmental limit tests in a controlled temperature/humidity chamber, and we measure how our sensors perform against reference sensors across the operating range to confirm that the product maintains high accuracy. “Every time we design our tests, our goal is to check: is the product safe? Is the product stable? Does it meet our documented specs? And does it meet the client’s needs?” Christian Graff, Hardware Test Engineer, said.
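The reference-sensor comparison described above can be sketched as a simple sweep check: at each chamber setpoint, compare the device under test against a calibrated reference and flag any setpoint where the difference exceeds a tolerance. This is a minimal sketch under assumed values; the readings, the 0.5 °C tolerance, and the function names are hypothetical and are not Sense Nano specifications. Humidity could be checked the same way with its own tolerance.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Sample:
    """One chamber setpoint: device-under-test (DUT) reading vs. reference reading."""
    setpoint_c: float
    dut_c: float        # reading from the sensor being validated (hypothetical data)
    reference_c: float  # reading from a calibrated reference sensor

def max_abs_error(samples: List[Sample]) -> float:
    """Largest absolute deviation from the reference across the sweep."""
    return max(abs(s.dut_c - s.reference_c) for s in samples)

def out_of_spec(samples: List[Sample], tolerance_c: float) -> List[float]:
    """Return the setpoints where the DUT deviates from the reference by more than tolerance_c."""
    return [s.setpoint_c for s in samples
            if abs(s.dut_c - s.reference_c) > tolerance_c]

# Hypothetical sweep across an assumed operating range.
sweep = [
    Sample(0.0, 0.2, 0.0),
    Sample(20.0, 20.1, 20.0),
    Sample(40.0, 40.6, 40.0),
]
print(out_of_spec(sweep, tolerance_c=0.5))  # [40.0]
```

Reporting the failing setpoints, rather than a single pass/fail flag, gives designers the kind of specific feedback that points to where in the operating range the product needs refinement.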

Everything is driven by the design. “If the product doesn’t meet the objectives, we’re not afraid of sounding the alarm and bringing the news to the designers. We work closely with the development teams to provide feedback on how they will need to refine the product to make sure it meets PassiveLogic standards,” says John Easterling. This feedback can seem critical, but meeting our original requirements is crucial to guaranteeing an excellent, consistent product.