Intro to Differentiable Swift, Part 0: Why Automatic Differentiation is Awesome

A Trillion Times Faster

What if code could run, not just forwards, but backwards too? That was Edward Fredkin’s dream of reversible computing. While automatic differentiation isn’t exactly reversible computing, it’s a close proxy for solving the large number of computing problems that take the form of: “I have the answer… what was the question?” (aka “I know what I want to come out of this function, but I don’t know the inputs that will produce those outputs”). These problems are numerous and incredibly varied, spanning AI and machine learning, optimization, regression, probability, financial systems, physics, biological systems, chemical analysis, automation, 3D rendering, and video games.

Swift is the first programming language with first-class compiler support for auto-diff. It will change the way you think about programming: what’s possible, what’s intractable, and how you can build blazingly fast solutions to problems that have defied programmers for decades.

Many of the well-known problems in computing and the study of algorithms start with high dimensionality. To get an answer out of a given function, you often must first settle a large number of input parameters. In neural nets, for example, you can easily have 10 million parameters that must be tuned before a single output means anything. Finding the right 10 million values can be intractably expensive. It would take 100,000 years for the largest supercomputer to solve this problem through an exhaustive search of the variable space. But if you run the computation the other way, starting from the one output and asking “which way should each of the 10,000,000 inputs move?”… now you can solve the same problem in minutes!

Your Differentiable Future

This powerful concept, called automatic differentiation, is at the foundation of the deep learning revolution. It replaces many searches that would take exponential time with a gradient that costs only a small constant multiple of running the function once. It’s what makes popular libraries like TensorFlow and PyTorch possible. And yet, it has nothing to do with deep learning or neural nets at all. It’s a general concept that, until recently, was buried deep in neural net libraries, and we are only at the frontier of exploiting it.

Code is Math

You’ve written functions that take inputs, do some math, and produce outputs. Graphics, animation, physics simulation, image processing, neural networks. Every codebase has functions that do math. They’re everywhere.

Same old code… now with derivatives!

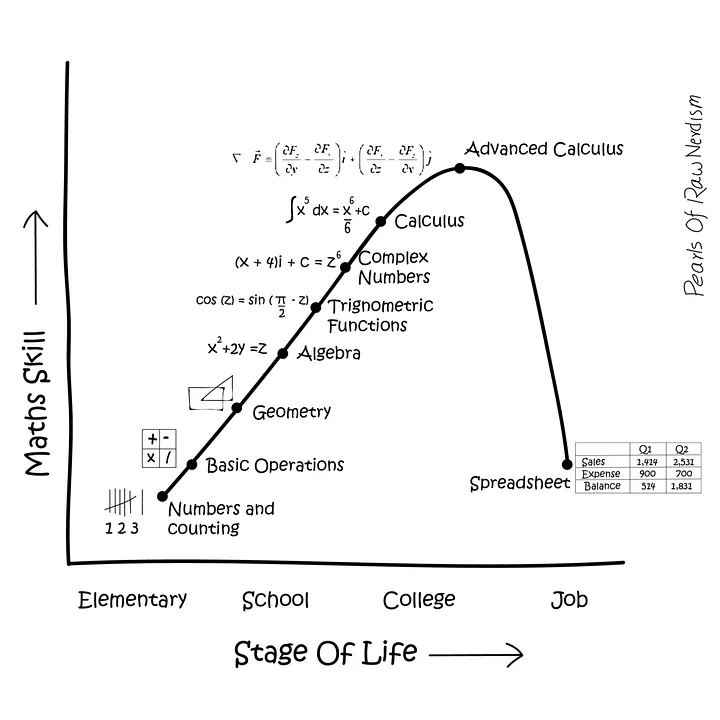

Remember calculus class in high school? Barely? If you are like most people, it’s likely you haven’t tapped that memory for a while. And there’s a reason. Computers don’t care about calculus; they are fundamentally algebra machines. Until now.

Auto-diff is a computational method of automatically generating the derivative of your code. You write whatever code you want, and the compiler will generate the standard version, as well as the derivative function of the same code. Pretty cool, huh? Calculus is back, baby.
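To make that concrete, here is a hand-written sketch of the kind of pairing involved: the ordinary function, plus a version that also returns a closure for its derivative. The names `curve` and `curveWithPullback` are made up for illustration, and this is only a mental model. The code the compiler actually generates will look different.

```swift
// Hypothetical example function: f(x) = x² + 3x.
func curve(_ x: Double) -> Double {
    x * x + 3 * x
}

// Hand-written stand-in for a generated derivative. It returns the normal
// value plus a "pullback" closure that maps a sensitivity on the output
// back to a sensitivity on the input.
func curveWithPullback(_ x: Double) -> (value: Double, pullback: (Double) -> Double) {
    let value = x * x + 3 * x
    // d/dx (x² + 3x) = 2x + 3
    return (value, { upstream in upstream * (2 * x + 3) })
}

let (value, pullback) = curveWithPullback(2)
let slope = pullback(1)  // seed with 1 to read off df/dx at x = 2
print("f(2) =", value, " f'(2) =", slope)
```

With auto-diff you only write `curve`; the second function is the part you no longer have to derive and maintain by hand.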

In computing there are three basic types of derivatives: symbolic, numeric, and programmatic. Symbolic math is the stuff of chalkboards and classrooms: good conceptually, but problematic to apply to real-world code and noisy data. Numerical differentiation is the typical go-to in algebraic solvers, but it is expensive (at least one extra function evaluation per input) and often inaccurate. The kind of derivatives we are talking about in this article are programmatic derivatives, where the compiler walks the code execution backwards to produce a reverse derivative. This is what auto-diff does, and the back-propagation used to train neural nets is one special case of it. It is accurate, fast, and deals with real-world noisy data… and it provides exactly what we need to perform the basic maneuver of this algorithm class: gradient descent.
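For a feel of why the numeric route gets expensive, here is a small sketch of a forward finite-difference gradient in plain Swift. The function `example`, the helper `finiteDifferenceGradient`, and the step size are all assumptions picked for illustration, not part of any library.

```swift
// Hypothetical function of three inputs: f(x, y, z) = x·y + z².
func example(_ v: [Double]) -> Double {
    v[0] * v[1] + v[2] * v[2]
}

// Forward finite differences: bump one input at a time and re-run the function.
// Returns the approximate gradient and how many times the function was called.
func finiteDifferenceGradient(
    of function: ([Double]) -> Double,
    at point: [Double],
    step h: Double = 1e-6
) -> (gradient: [Double], evaluations: Int) {
    let base = function(point)
    var evaluations = 1
    var gradient = [Double](repeating: 0, count: point.count)
    for i in point.indices {
        var bumped = point
        bumped[i] += h
        gradient[i] = (function(bumped) - base) / h
        evaluations += 1
    }
    return (gradient, evaluations)
}

let point = [2.0, 3.0, 4.0]
let numeric = finiteDifferenceGradient(of: example, at: point)
// Derivative worked out by hand: (y, x, 2z)
let exact = [point[1], point[0], 2 * point[2]]
print(numeric.gradient, "vs exact", exact, "using", numeric.evaluations, "evaluations")
```

Three inputs cost four runs of the function, and the result is only approximately right. Scale that to 10 million inputs and you need 10 million and one runs for a single gradient, which is the cost a reverse derivative avoids.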

Making Code Differentiable

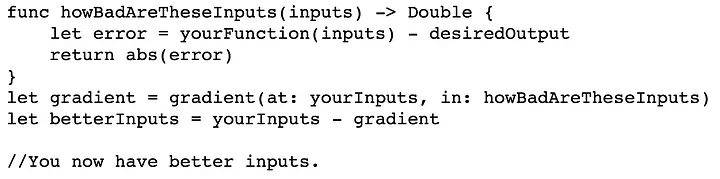

Making a differentiable function in Swift is very straightforward. You can optimize yourFunction in a few lines of code.

The technique is Gradient Descent: repeatedly nudge the inputs in the direction that improves the output. You can only use Gradient Descent if you can get derivatives with respect to the inputs of your function. If you are using a language with Automatic Differentiation (like Swift), getting the derivatives is as easy as a single gradient(at:in:) call!
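Here is a minimal sketch of what such a loop might look like. It assumes a toolchain that ships the experimental `_Differentiation` module, and it uses the `gradient(at:of:)` spelling, which as far as I know is what recent toolchains expose in place of the older `in:` label. The body of `yourFunction`, the helper `minimize`, the learning rate, and the step count are all placeholders chosen for illustration.

```swift
import _Differentiation

// Placeholder for "yourFunction": a simple bowl whose minimum is at x = 3.
@differentiable(reverse)
func yourFunction(_ x: Double) -> Double {
    let distance = x - 3
    return distance * distance
}

// Plain gradient descent: ask for the slope at the current guess,
// then step a little way downhill, and repeat.
func minimize(from start: Double, learningRate: Double = 0.1, steps: Int = 100) -> Double {
    var x = start
    for _ in 0..<steps {
        let slope = gradient(at: x, of: yourFunction)
        x -= learningRate * slope
    }
    return x
}

let best = minimize(from: 0)
print("best input ≈", best)
```

Nothing in the loop knows how `yourFunction` works inside. Swap in a different differentiable function and the same few lines still apply, although the learning rate and step count would likely need retuning.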

And that’s why Automatic Differentiation is Awesome! We’re done here!

Well, if you want to know a little more, continue on to part 1 :)